Ivy (core workflow)

2025

Ivy celebrates your successes and is forgiving when things don't go as planned. It aims to feel timeless by following the natural cycle of day and night: you start by planning the day ahead, then commit and make progress, and finally reflect on what you've done. The interface is built around these three modes, which keeps each one intuitive and free of clutter.

Planning mode: The lock animation was prototyped in Rive, then implemented in SwiftUI for the final version because it is more lightweight. The orange background glow is rendered by a shader that evaluates a signed distance function instead of applying a traditional Gaussian blur, which improves performance.
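
A rough, CPU-side illustration of why an SDF glow is cheaper than a blur. A blur samples many neighboring pixels for every output pixel, whereas an SDF evaluates one closed-form distance per pixel and maps it to brightness. The rounded-rectangle shape, the exponential falloff, and the names `sdRoundedRect` and `glowIntensity` are assumptions for illustration. In the app this math would live in a shader, not in TypeScript.

```typescript
// Signed distance from point (px, py) to a rounded rectangle centered at the origin
// with half-extents (hx, hy) and corner radius r. Negative inside, positive outside.
function sdRoundedRect(px: number, py: number, hx: number, hy: number, r: number): number {
  const qx = Math.abs(px) - hx + r;
  const qy = Math.abs(py) - hy + r;
  const outside = Math.hypot(Math.max(qx, 0), Math.max(qy, 0));
  const inside = Math.min(Math.max(qx, qy), 0);
  return outside + inside - r;
}

// Map a distance to glow strength: full brightness inside the shape and an exponential
// falloff outside. This is one evaluation per pixel instead of many blur taps.
function glowIntensity(distance: number, falloff: number): number {
  return distance <= 0 ? 1 : Math.exp(-distance / falloff);
}
```

For example, `glowIntensity(sdRoundedRect(px - cx, py - cy, 120, 60, 24), 40)` would give the glow at pixel (px, py) around a shape centered at (cx, cy). The numbers are arbitrary.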

Doing mode: Completing an item plays a short animation masked by randomized simplex noise. After a short debounce, completed items are removed from the list and the progress circle grows to visualize your progress. The newly added segment of the arc is rendered in a bright HDR white that pops on device.
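
A noise-masked completion can be thought of as a threshold on a noise field. Each pixel of the item stays visible while its noise value is at least the animation's progress, so the item dissolves in organic patches rather than fading uniformly. To keep the sketch short it swaps in hash-based value noise for simplex noise. `valueNoise`, `isVisible`, and the `cellSize` parameter are hypothetical names.

```typescript
// Deterministic pseudo-random value in [0, 1) for an integer lattice point.
function hash(ix: number, iy: number, seed: number): number {
  let h = Math.imul(ix, 374761393) ^ Math.imul(iy, 668265263) ^ Math.imul(seed, 1442695041);
  h = Math.imul(h ^ (h >>> 13), 1274126177);
  h ^= h >>> 16;
  return (h >>> 0) / 4294967296;
}

// Smoothly interpolated value noise in [0, 1), used here as a stand-in for simplex noise.
function valueNoise(x: number, y: number, seed: number): number {
  const ix = Math.floor(x), iy = Math.floor(y);
  const fx = x - ix, fy = y - iy;
  // Smoothstep weights hide the underlying grid.
  const u = fx * fx * (3 - 2 * fx), v = fy * fy * (3 - 2 * fy);
  const a = hash(ix, iy, seed), b = hash(ix + 1, iy, seed);
  const c = hash(ix, iy + 1, seed), d = hash(ix + 1, iy + 1, seed);
  return (a * (1 - u) + b * u) * (1 - v) + (c * (1 - u) + d * u) * v;
}

// A pixel stays visible until the animation progress (0...1) passes its noise value.
// Randomizing the seed per completion makes every dissolve look different.
function isVisible(px: number, py: number, progress: number, seed: number, cellSize = 8): boolean {
  return valueNoise(px / cellSize, py / cellSize, seed) >= progress;
}
```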

Sealed mode: The 3D sparkles of the end-of-day animation are rendered procedurally on device. The implementation abstracts away SceneKit, so engineers can embed 3D content in a regular SwiftUI view layout. A carefully orchestrated sequence of animations across the screen, combined with light randomization, physics-based motion, and custom haptics, makes the animation feel fresh every time.
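
Physics-based motion of this kind is often modeled as a damped spring stepped once per frame. Jittering the spring's stiffness slightly for each sparkle is one way to get "light randomization." This is a generic sketch, not Ivy's code. `stepSpring`, `jitter`, the parameter values, and the 1/120 s time step are assumptions.

```typescript
interface SpringState {
  position: number;
  velocity: number;
}

// Advance a damped spring toward `target` by dt seconds (unit mass) using
// semi-implicit Euler, which stays stable at small fixed frame steps.
function stepSpring(s: SpringState, target: number, stiffness: number, damping: number, dt: number): SpringState {
  const force = -stiffness * (s.position - target) - damping * s.velocity;
  const velocity = s.velocity + force * dt;
  return { position: s.position + velocity * dt, velocity };
}

// Scale a value by up to +/- `amount` (e.g. 0.1 = 10%) so no two particles settle identically.
function jitter(value: number, amount: number, random: () => number = Math.random): number {
  return value * (1 + (random() * 2 - 1) * amount);
}
```

A particle could then be animated with `stepSpring(state, 1, jitter(170, 0.1), 26, 1 / 120)` on each frame until it settles.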

Ivy beta website

2025

Built with SvelteKit. The hero animation was modeled and rendered in Blender. The site features multiple interactive 3D scenes and a firefly simulation with emergent behavior that mirrors the app's peer-to-peer philosophy.
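
The source does not describe the firefly model. A classic way to get emergent behavior from purely peer-to-peer interactions is flash synchronization with the Kuramoto model, where each firefly nudges its flash phase toward the phases of the others. The sketch assumes all-to-all coupling and uses hypothetical names (`stepFireflies`, `syncLevel`, `coupling`). A visual version would flash a firefly whenever its phase wraps past 2π.

```typescript
const TWO_PI = 2 * Math.PI;

// One Kuramoto step: each phase advances at its natural frequency plus a pull toward
// every other firefly, proportional to the sine of their phase difference.
function stepFireflies(phases: number[], frequencies: number[], coupling: number, dt: number): number[] {
  const n = phases.length;
  return phases.map((theta, i) => {
    let pull = 0;
    for (const other of phases) pull += Math.sin(other - theta);
    const next = theta + (frequencies[i] + (coupling / n) * pull) * dt;
    return ((next % TWO_PI) + TWO_PI) % TWO_PI; // wrap into [0, 2π)
  });
}

// Order parameter r in [0, 1]: near 0 means incoherent flashing, 1 means perfect sync.
function syncLevel(phases: number[]): number {
  let re = 0, im = 0;
  for (const theta of phases) {
    re += Math.cos(theta);
    im += Math.sin(theta);
  }
  return Math.hypot(re, im) / phases.length;
}
```

No firefly sees a global clock. Synchronization emerges only from pairwise nudges, which is the property the site's simulation is meant to evoke.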

Inbox (prototype)

2024

Built over the holidays: a local-first save-it-for-later app. It extracts highlights from websites and PDFs via OpenAI's JSON outputs API, saves them to an on-device vector store, and lets you write in Markdown. Search combines sparse (keyword) and dense (semantic) results, and each note links out to semantically similar notes for playful exploration. If a location is detected, a map appears.
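
The source does not say how the sparse and dense results are merged. One common option that needs no tuning is Reciprocal Rank Fusion (RRF), which scores each note by summing 1 / (k + rank) over every ranked list it appears in. The sketch assumes both retrievers return note IDs in best-first order and uses the conventional k = 60. `fuseResults` is a hypothetical name.

```typescript
// Reciprocal Rank Fusion over several best-first ranked lists of note IDs.
// Notes that rank well in both keyword and semantic results rise to the top.
function fuseResults(rankings: string[][], k = 60): string[] {
  const scores = new Map<string, number>();
  for (const ranking of rankings) {
    ranking.forEach((id, index) => {
      // Ranks are 1-based in the usual RRF formulation.
      scores.set(id, (scores.get(id) ?? 0) + 1 / (k + index + 1));
    });
  }
  return Array.from(scores.entries())
    .sort((a, b) => b[1] - a[1])
    .map(([id]) => id);
}
```

Calling `fuseResults([keywordHits, semanticHits])` yields a single ranked list. Because RRF uses only ranks, it avoids having to normalize keyword scores against cosine similarities, which live on different scales.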

The most visually complex component is the card: the title sits on the content without obscuring it, overlapping by only a few pixels. To create the illusion that the image continues beneath the title, a Metal shader repeats the bottom pixels, applies progressive blur, and overlays a gradient derived from the image to hide the seam.
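
The seam-hiding step can be sketched on the CPU with a grayscale image stored as rows of numbers. Each synthetic row below the image repeats the last real row (clamp-to-edge sampling) and is then blurred horizontally. The blur radius grows with distance from the seam, which makes the blur progressive. The real effect is a Metal shader that also overlays an image-derived gradient, and this sketch leaves that gradient out. `blurRow`, `extendWithBlur`, and `maxRadius` are hypothetical.

```typescript
// Horizontal box blur of one row with an integer radius; samples past the edges are clamped.
function blurRow(row: number[], radius: number): number[] {
  return row.map((_, x) => {
    let sum = 0;
    for (let dx = -radius; dx <= radius; dx++) {
      const sx = Math.min(row.length - 1, Math.max(0, x + dx));
      sum += row[sx];
    }
    return sum / (2 * radius + 1);
  });
}

// Append `extraRows` rows below `image` (row-major grayscale). Each new row repeats the
// image's bottom row and is blurred more strongly the farther it lies below the seam.
function extendWithBlur(image: number[][], extraRows: number, maxRadius: number): number[][] {
  const bottom = image[image.length - 1];
  const extended = image.map((row) => [...row]);
  for (let i = 1; i <= extraRows; i++) {
    const radius = Math.round((i / extraRows) * maxRadius);
    extended.push(blurRow(bottom, radius));
  }
  return extended;
}
```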

Other favorites: the add button morphs into the composer, which then morphs into the list. Background colors are sampled from the page's Open Graph image and tuned in LCh to lift chroma and lightness perceptually.
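
Tuning in a perceptual space lets lightness and chroma change while hue stays put. The sketch uses OKLCh, the polar form of Björn Ottosson's OKLab, as a stand-in. The source only says "LCh," which may mean CIE LCh instead. `tuneColor`, `lightnessBoost`, and `chromaScale` are hypothetical, and out-of-gamut results are simply clamped rather than gamut-mapped.

```typescript
type Vec3 = [number, number, number];

// sRGB transfer functions (components in 0...1).
const toLinear = (c: number) => (c <= 0.04045 ? c / 12.92 : ((c + 0.055) / 1.055) ** 2.4);
const toGamma = (c: number) => (c <= 0.0031308 ? 12.92 * c : 1.055 * c ** (1 / 2.4) - 0.055);

// sRGB -> OKLab, using the matrices published with OKLab.
function toOklab([sr, sg, sb]: Vec3): Vec3 {
  const r = toLinear(sr), g = toLinear(sg), b = toLinear(sb);
  const l = Math.cbrt(0.4122214708 * r + 0.5363325363 * g + 0.0514459929 * b);
  const m = Math.cbrt(0.2119034982 * r + 0.6806995451 * g + 0.1073969566 * b);
  const s = Math.cbrt(0.0883024619 * r + 0.2817188376 * g + 0.6299787005 * b);
  return [
    0.2104542553 * l + 0.793617785 * m - 0.0040720468 * s,
    1.9779984951 * l - 2.428592205 * m + 0.4505937099 * s,
    0.0259040371 * l + 0.7827717662 * m - 0.808675766 * s,
  ];
}

// OKLab -> sRGB, clamping linear values into the displayable range.
function fromOklab([L, a, b]: Vec3): Vec3 {
  const l = (L + 0.3963377774 * a + 0.2158037573 * b) ** 3;
  const m = (L - 0.1055613458 * a - 0.0638541728 * b) ** 3;
  const s = (L - 0.0894841775 * a - 1.291485548 * b) ** 3;
  const linear: Vec3 = [
    4.0767416621 * l - 3.3077115913 * m + 0.2309699292 * s,
    -1.2684380046 * l + 2.6097574011 * m - 0.3413193965 * s,
    -0.0041960863 * l - 0.7034186147 * m + 1.707614701 * s,
  ];
  return linear.map((c) => toGamma(Math.min(1, Math.max(0, c)))) as Vec3;
}

// Lift lightness and scale chroma in OKLCh while keeping hue fixed.
function tuneColor(rgb: Vec3, lightnessBoost: number, chromaScale: number): Vec3 {
  const [L, a, b] = toOklab(rgb);
  const chroma = Math.hypot(a, b) * chromaScale;
  const hue = Math.atan2(b, a);
  const lifted = Math.min(1, L + lightnessBoost);
  return fromOklab([lifted, chroma * Math.cos(hue), chroma * Math.sin(hue)]);
}
```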

Semantical pre-product website

2024

A WebGL2 background (shown here at 3x speed) rendered with multi-layer Voronoi. The shaders go through an optimized GLSL toolchain (glslang/spirv), so the background adds only a tiny amount to the bundle size. Cursor interaction runs on a lightweight, friction-based physics model, and a JSON config enables rapid visual iteration. Display-P3 and reduced motion are supported.
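
A multi-layer Voronoi field can be sketched as cellular (Worley) noise summed over several layers. Each layer measures the distance to the nearest jittered feature point among the surrounding 3×3 cells, which is the usual shader approximation. The next layer doubles the frequency and halves the weight. This is a TypeScript CPU sketch of the idea, not the site's GLSL. The hash, the layer count, and the names `cellPoint`, `voronoiF1`, and `layeredVoronoi` are assumptions.

```typescript
// Hash an integer cell to a jittered feature-point offset in [0, 1) x [0, 1).
function cellPoint(ix: number, iy: number, seed: number): [number, number] {
  let h = Math.imul(ix, 374761393) ^ Math.imul(iy, 668265263) ^ Math.imul(seed, 1442695041);
  h = Math.imul(h ^ (h >>> 13), 1274126177);
  const jx = ((h ^ (h >>> 16)) >>> 0) / 4294967296;
  h = Math.imul(h ^ (h >>> 15), 2246822519);
  const jy = ((h ^ (h >>> 16)) >>> 0) / 4294967296;
  return [jx, jy];
}

// F1: distance from (x, y) to the closest feature point in the 3x3 neighborhood of cells.
function voronoiF1(x: number, y: number, seed: number): number {
  const cx = Math.floor(x), cy = Math.floor(y);
  let best = Infinity;
  for (let dy = -1; dy <= 1; dy++) {
    for (let dx = -1; dx <= 1; dx++) {
      const [jx, jy] = cellPoint(cx + dx, cy + dy, seed);
      best = Math.min(best, Math.hypot(cx + dx + jx - x, cy + dy + jy - y));
    }
  }
  return best;
}

// Weighted sum of Voronoi layers, normalized so the result stays within F1's range [0, sqrt(2)].
function layeredVoronoi(x: number, y: number, layers = 3, seed = 0): number {
  let sum = 0, total = 0, weight = 1, frequency = 1;
  for (let i = 0; i < layers; i++) {
    sum += weight * voronoiF1(x * frequency, y * frequency, seed + i);
    total += weight;
    weight *= 0.5;
    frequency *= 2;
  }
  return sum / total;
}
```

In a fragment shader, the same loop would run per pixel, and adding a slowly changing time offset to the feature points animates the cells. That animation is omitted here.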

SnapSum

2023

SnapSum was an app I built in high school using Apple's on-device vision models and OpenAI's GPT-3.5. It can answer questions, generate summaries, and create mind maps from text in photos.

My favorite interaction was the photo-taking experience. The captured image crop is frozen in the viewfinder, then recedes smoothly into the stack after it has been processed. Finally, it animates into the button of the tapped action. The toughest part was working with low-level camera APIs to achieve certain visual effects without draining the battery.
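
The capture-to-button motion can be modeled as one frame interpolated through keyframes: the frozen crop in the viewfinder, its slot in the stack, and finally the tapped button. This sketch blends frames linearly and eases each segment with smoothstep. The `Rect` shape, the names `lerpRect` and `frameAt`, and the evenly spaced keyframes are assumptions for illustration.

```typescript
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Ease-in-out curve on [0, 1] so each leg of the motion starts and settles gently.
const smoothstep = (t: number) => t * t * (3 - 2 * t);

function lerpRect(a: Rect, b: Rect, t: number): Rect {
  const mix = (p: number, q: number) => p + (q - p) * t;
  return { x: mix(a.x, b.x), y: mix(a.y, b.y), width: mix(a.width, b.width), height: mix(a.height, b.height) };
}

// Frame at overall progress t in [0, 1]. Requires at least two keyframes,
// which are treated as evenly spaced in time.
function frameAt(keyframes: Rect[], t: number): Rect {
  const segments = keyframes.length - 1;
  const clamped = Math.min(1, Math.max(0, t));
  const index = Math.min(segments - 1, Math.floor(clamped * segments));
  const local = clamped * segments - index;
  return lerpRect(keyframes[index], keyframes[index + 1], smoothstep(local));
}
```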

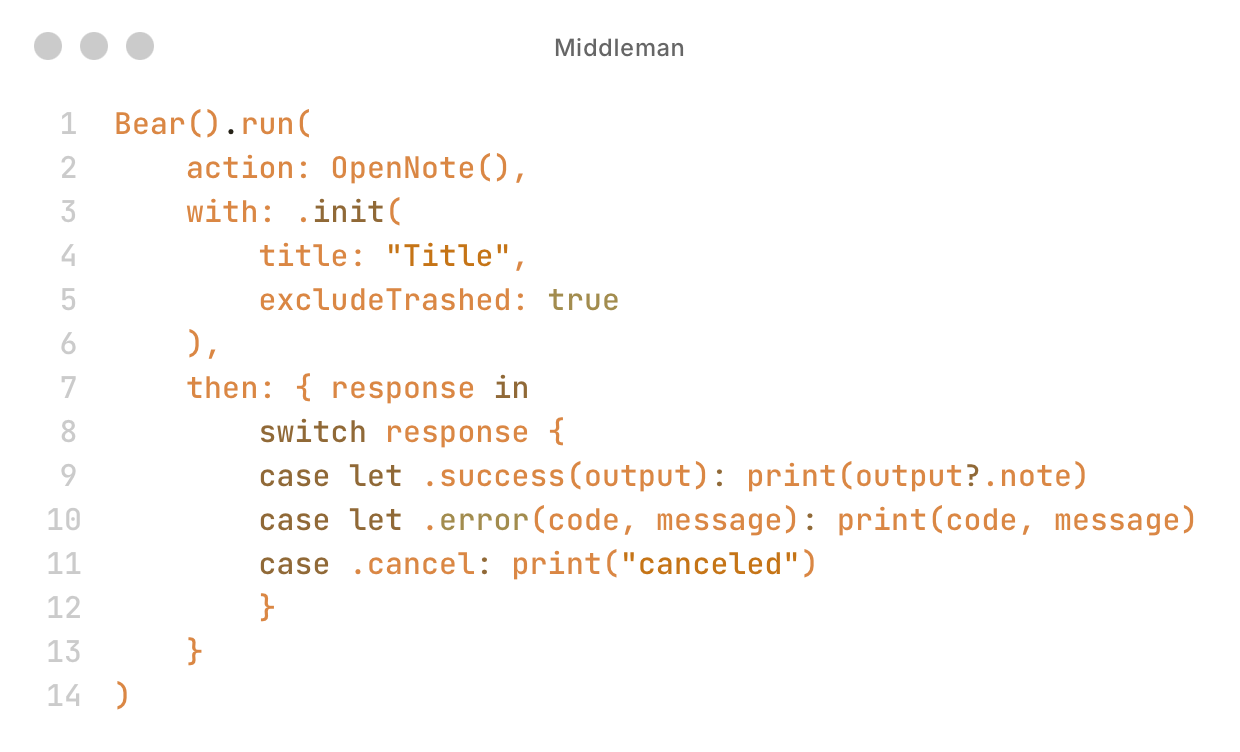

Middleman & Honey

2020

Middleman is a Swift framework for x-callback-url. Compared with the Objective-C options available at the time, it is type-safe and easy to use. Honey, built on Middleman, wraps the x-callback-url API of the Bear notes app, enabling Bear automation from Swift.
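
An x-callback-url is an ordinary URL of the form `scheme://x-callback-url/action?parameters`. The optional `x-success`, `x-error`, and `x-cancel` parameters tell the target app where to return afterwards. Middleman itself is Swift, so this TypeScript sketch only shows the URL shape. `buildXCallbackURL` is a hypothetical helper. Bear's `create` action with `title` and `text` serves as the example.

```typescript
interface Callbacks {
  success?: string;
  error?: string;
  cancel?: string;
}

// Build `scheme://x-callback-url/action?...`, percent-encoding every key and value so
// nested callback URLs survive intact. Action parameters come first, then x-callback ones.
function buildXCallbackURL(
  scheme: string,
  action: string,
  params: Record<string, string>,
  callbacks: Callbacks = {},
): string {
  const pairs: [string, string][] = Object.entries(params);
  if (callbacks.success) pairs.push(["x-success", callbacks.success]);
  if (callbacks.error) pairs.push(["x-error", callbacks.error]);
  if (callbacks.cancel) pairs.push(["x-cancel", callbacks.cancel]);
  const query = pairs.map(([k, v]) => `${encodeURIComponent(k)}=${encodeURIComponent(v)}`).join("&");
  return `${scheme}://x-callback-url/${action}${query ? `?${query}` : ""}`;
}
```

A type-safe wrapper like Middleman can catch mistakes that raw string assembly cannot. Examples include a misspelled action name or an unencoded nested URL.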

I built them to automate my daily journaling, such as creating a new note from a template and carrying over unfinished to-dos (a flow that ultimately evolved into Ivy).
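
The carry-over step amounts to collecting unchecked items from the previous note and inserting them into a fresh template. The sketch assumes Markdown-style task lines (`- [ ]` open, `- [x]` done) and a `{{todos}}` placeholder in the template. Both of these, and the names `unfinishedTodos` and `carryOverTodos`, are illustrative assumptions, not Bear's or Honey's API.

```typescript
// Collect unchecked Markdown tasks ("- [ ] ..." or "* [ ] ...") from the previous note.
// Indentation is dropped for simplicity, so nested tasks are flattened.
function unfinishedTodos(previousNote: string): string[] {
  return previousNote
    .split("\n")
    .filter((line) => /^\s*[-*] \[ \] /.test(line))
    .map((line) => line.trim());
}

// Fill the daily template. A replacer function is used so "$" in a task is kept literally.
function carryOverTodos(template: string, previousNote: string): string {
  return template.replace("{{todos}}", () => unfinishedTodos(previousNote).join("\n"));
}
```

The resulting text could then be passed as the `text` parameter of a note-creation call.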

These were my first public projects to garner some attention, and they were written in Swift, my first programming language (which I love deeply). For both reasons, they still hold a special place in my heart.